Legal AI Knows What It Doesn't Know, Which Makes It The Most Intelligent Artificial Intelligence Of All

Casetext's Co-Counsel thinks like a good junior lawyer, which is exactly what lawyers need from AI.

One thing witnesses struggle with is that a critical part of telling the truth is knowing what you don’t know. Most people want to please the lawyer asking the questions. It’s not only the human thing to do, but they find themselves thrust into an alien legal proceeding and issued dire warnings about not telling the truth. They want to be helpful. But a speculating witness is, fundamentally, a lying witness. Sometimes it’s hard to convey that to normal people, but when they confidently guess at stuff they don’t know for sure, they’re not doing anyone a service. In fact, they’re usually screwing everything up.

I thought about how many times I had to have this talk with a witness while I watched Casetext CEO Jake Heller and Chief Innovation Officer Pablo Arredondo demonstrate their latest offering, Co-Counsel. Because of all the skills it brings to the legal AI game, the most impressive is the ability to know what it doesn’t know.

Amid all this hype about ChatGPT and “generative AI” and clickbaity headlines either lamenting or trumpeting the decline of lawyers before Bing’s unflinching contempt, most folks are overlooking how often these tools are just plain… wrong. ChatGPT has been described as “Mansplaining As A Service” and that sounds about right.

It can hallucinate up an answer to queries and explain it just confidently enough to lead everyone astray. Arredondo relayed a story about testing an AI system that gaslit him about a case he litigated, right up to sending him “proof” in the form of a made-up URL. If the challenge for legal AI over the course of the next year is solving the “garbage in” problem of running on bad data, then the metachallenge is getting AI that won’t even try it.

As for the first challenge:

To tailor general AI technology for the demands of legal practice, Casetext established a robust trust and reliability program managed by a dedicated team of AI engineers and experienced litigation and transactional attorneys. Casetext’s Trust Team, which has run every legal skill on the platform through thousands of internal tests, has spent nearly 4,000 hours training and fine-tuning CoCounsel’s output based on over 30,000 legal questions. Then, all CoCounsel applications were used extensively by a group of beta testers composed of over four hundred attorneys from elite boutique and global law firms, in-house legal departments, and legal aid organizations, before being deployed. These lawyers and legal professionals have already used CoCounsel more than 50,000 times in their day-to-day work.

The user interface resembles a standard chatbot. From there, the system can perform a number of specific legal tasks. But, importantly, tasks that attorneys might actually want an AI to perform.

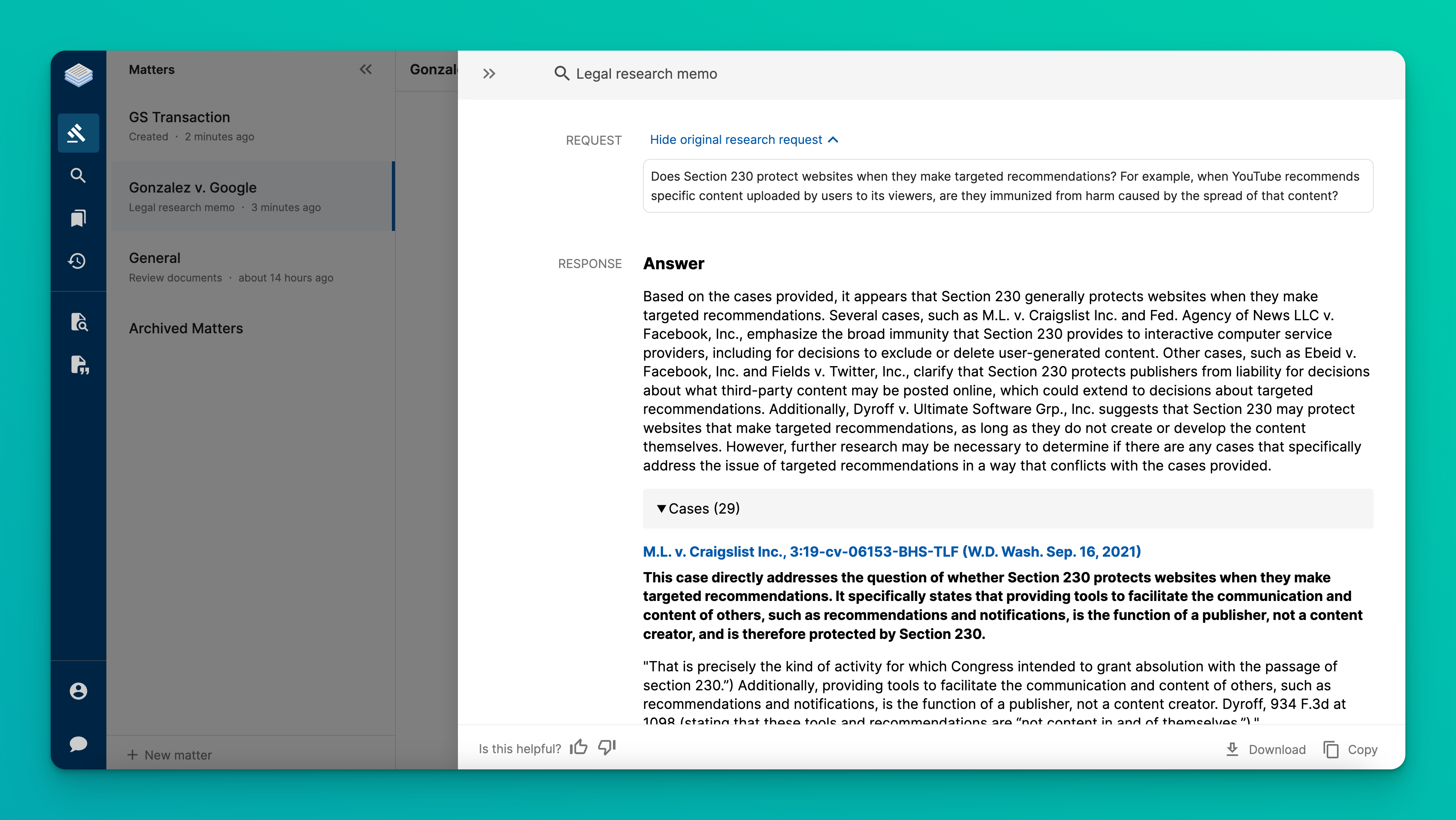

Because it’s Casetext, obviously it can aid in legal research:

It takes the natural language question, breaks it down, runs the query, summarizes the results AND gives the attorney access to the cases. Not a surprising feature necessarily, but it betrays a critical distinction between serious AI and the fun and games. The parlor trick is “generative” AI, giggling that it can write some output. Far more important is the AI’s ability to recognize and break down the request. Co-Counsel is crafting a number of research queries off that question, just as one would expect a junior lawyer to do. And then it’s taking the results, reading them, and drawing conclusions to guide the attorney when digging into the results.

But, while cool, the other tasks that Co-Counsel tackles impressed me more.

Taking a deposition? Ask the system to come up with some questions on some key topics and it’ll spit out a ready-made outline. The example run for me involved a hypothetical bribery case and it had questions prepared with the names of the parties that asked about the elements of the cause of action. It’s not the end of the prep, but it’s lopping an hour of drudgery off of it.

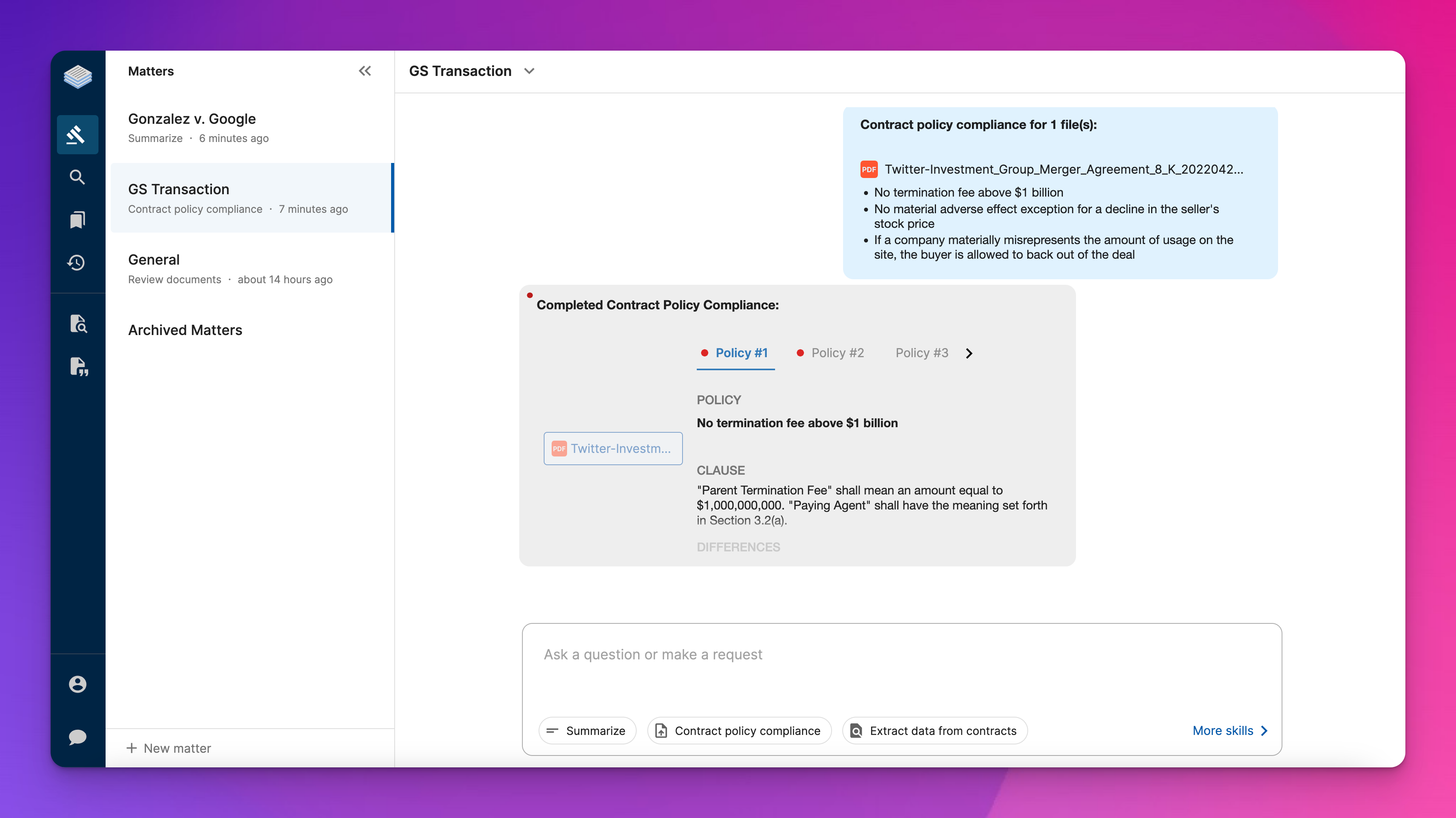

The transactional lawyer isn’t left out either. Co-Counsel can scour a draft agreement to ensure it meets requirements that you select and it’ll not only figure it out, but suggest a redline where the draft falls short.

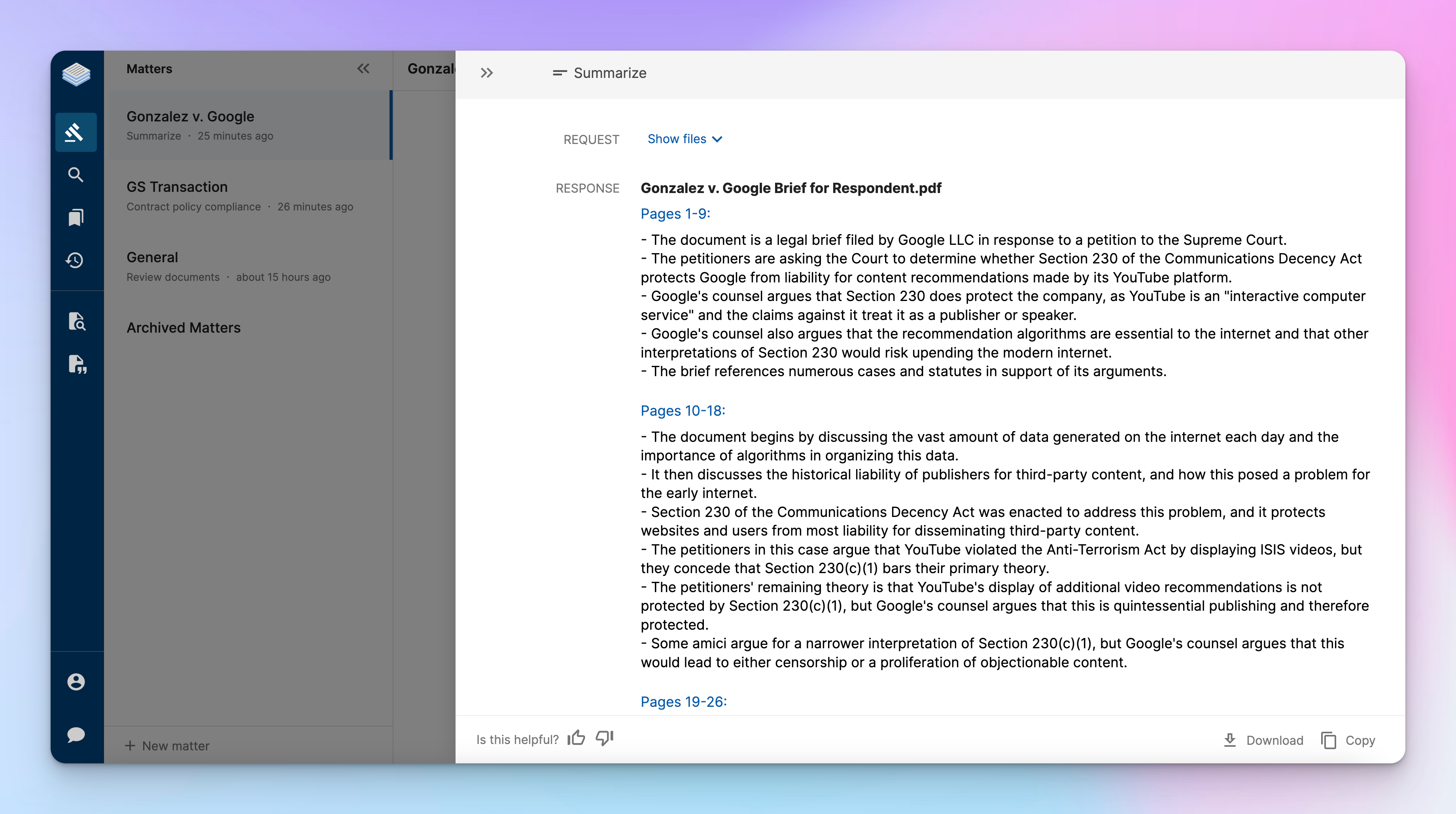

Catching up on the facts of the matter? Feed it a bunch of documents and it’ll provide a summary to keep the lawyer from wasting valuable time digging through each paper. If you’re looking for something more specific, ask the system to analyze a universe of documents with an eye toward specific issues:

This was just dragging and dropping documents into the UI and it’s handing over a Reader’s Digest version.

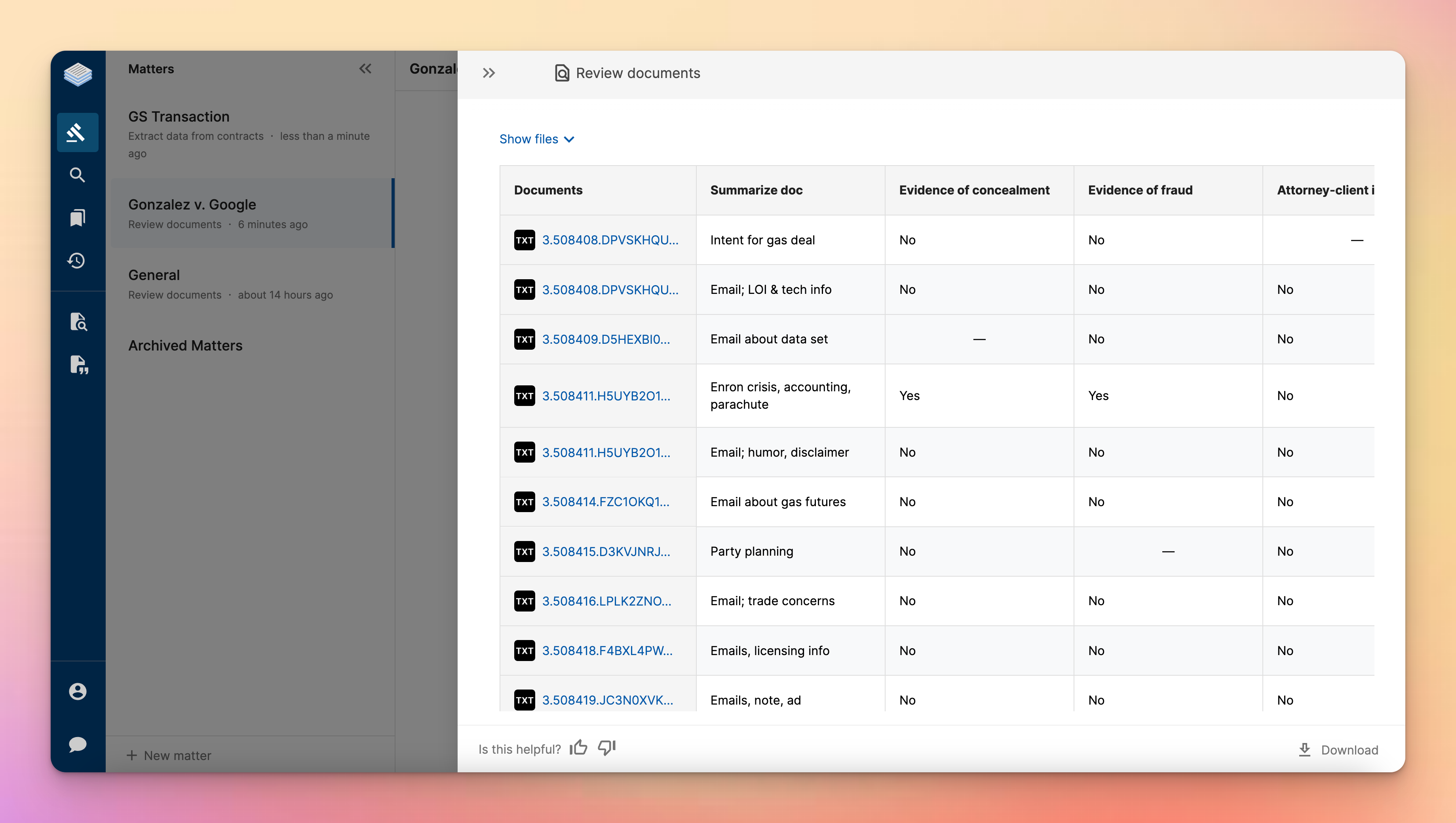

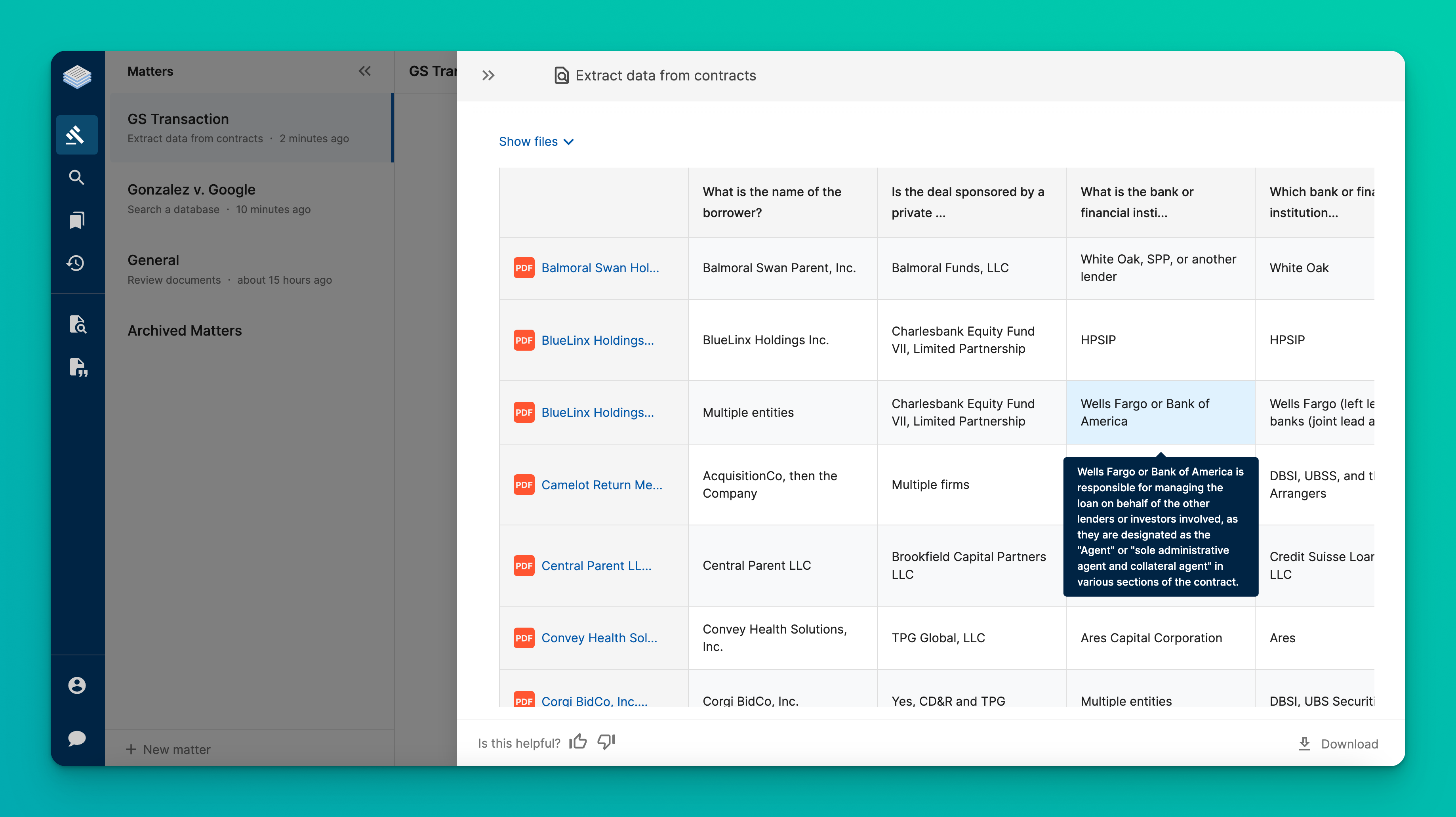

For something more robust than a summary, the user can point the system toward a bunch of documents and ask specific questions about the contents to get something like this…

Casetext ran me through a slightly different example than the one pictured to allow me to choose my own queries. We examined this body of Obama speeches for insights into whether he told a joke or alluded to a tragedy, and to ascertain his views on immigration. But for illustrative purposes the image gets to the heart of it. The system will not just relay the answer, but tell you if it can’t be certain and then, if the user hovers over the answer, it’ll explain why. In my demo, if it saw text that might be funny but wasn’t clearly a laugh line, like a dad joke, it would flag it, offer its reasoning, and provide a link so the user can jump to the passage and check it out.

Let’s face it, lawyers don’t want and aren’t going to depend on an AI’s scan of the document any more than they rely on a summer associate’s. We’re in “trust but verify” territory as Arredondo put it. Attorneys need a system that quickly flags “yes, no, and maybe” and then provides a fast way for the experienced lawyer to look over the merits of each call.

That’s where AI provides the most value to lawyers. It’s not about replacing the human that makes the decision, it’s about replacing the write-off hours sending a junior off to slap together drafts. And like that junior, the AI isn’t going to know everything. Unlike that junior, Co-Counsel is going to transparently provide the attorney with a full grasp of the bases of what it does and does not know.

Which might sound like a knock to a non-lawyer who, like the witnesses described at the beginning, thinks that they’re being helpful when they volunteer their own speculation based on incomplete evidence. But it’s more helpful to be accurate.

Joe Patrice is a senior editor at Above the Law and co-host of Thinking Like A Lawyer. Feel free to email any tips, questions, or comments. Follow him on Twitter if you’re interested in law, politics, and a healthy dose of college sports news. Joe also serves as a Managing Director at RPN Executive Search.
